One of the most intimidating challenges in machine learning is going from a proof of concept to a production-ready application. All too often, an ML model that performs brilliantly in experimental settings falls short in real-world applications. Only 32% of the data scientists surveyed said their ML models usually deploy successfully, which underlines the need for a more structured approach.

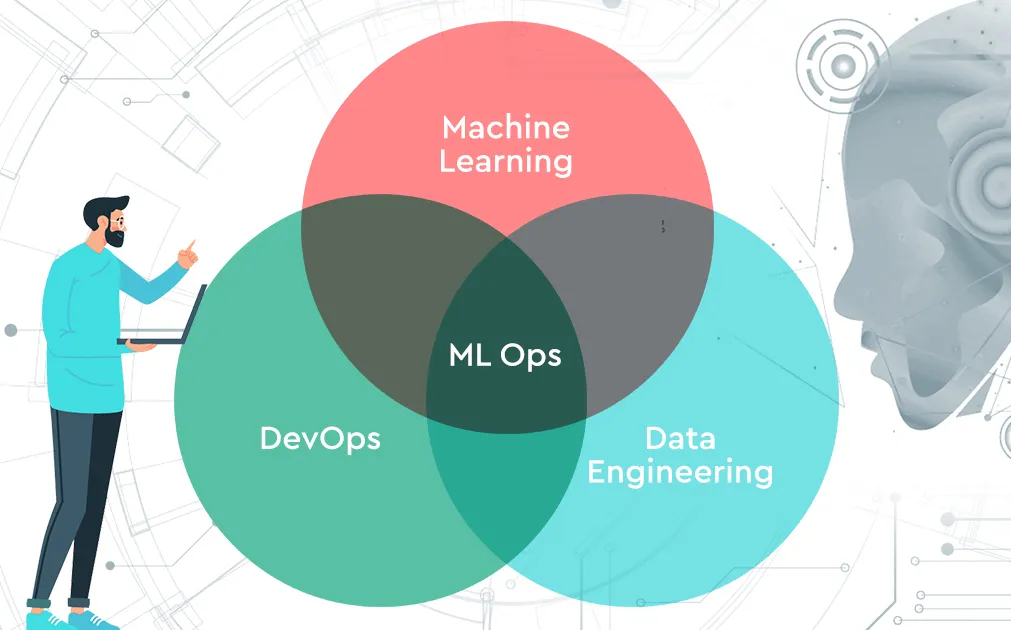

This is where machine learning operations, or MLOps, comes in.

MLOps has played a pivotal role in reinventing the way we approach machine learning development. So what is MLOps, and why do we need it?

This article explores MLOps: it gives a clear definition, outlines the key components, and explains why implementing it matters.

You can also turn to ITRex's MLOps consulting to learn more about MLOps opportunities and explore its potential in your sector, gaining a deeper understanding that lets you harness MLOps to transform your business.

What is MLOps?

You can encounter a wide variety of MLOps definitions on the web. At ITRex, we define MLOps as follows.

MLOps brings together data science, machine learning, and software engineering to develop, deploy, monitor, and continuously track models running in production, streamlining the AI lifecycle.

MLOps fits into every stage of the AI lifecycle, from data collection to model retraining, ensuring smooth transitions and continuous model development and deployment.

Key components of MLOps

What is MLOps in terms of its key elements? While there may be more, the following are the most crucial components of MLOps that work together to streamline the end-to-end process of deploying and maintaining machine learning models, ensuring reliability, scalability, and efficiency:

Collaboration

MLOps helps teams integrate and build faster and more scalable machine learning models, without the siloes and disjointedness typical of traditional ML projects.

Automation

MLOps automates each step of the ML workflow to ensure repeatability, consistency, and scalability. Changes to data and model training code, calendar events, messages, and monitoring events can all trigger automated model training and deployment. Automated reproducibility is central to MLOps: it supports the accuracy, traceability, and stability of the ML solution over time. This proactive approach enables continuous model updates and refinements and helps keep ML solutions reliable and performant.
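
To make the idea of event-triggered automation concrete, here is a minimal sketch. It assumes four trigger types taken from the paragraph above and a hypothetical policy that data or code changes re-run data validation first. The names `Trigger`, `Event`, and `plan_pipeline` are illustrative and do not come from any particular MLOps tool.

```python
from dataclasses import dataclass
from enum import Enum


class Trigger(Enum):
    DATA_CHANGED = "data_changed"
    CODE_CHANGED = "code_changed"
    SCHEDULE = "schedule"
    MONITORING_ALERT = "monitoring_alert"


@dataclass(frozen=True)
class Event:
    trigger: Trigger
    detail: str


def plan_pipeline(event: Event) -> list[str]:
    """Return the ordered pipeline steps that an event should start."""
    steps = ["train", "evaluate"]
    # Hypothetical policy: changed inputs are validated before training.
    if event.trigger in (Trigger.DATA_CHANGED, Trigger.CODE_CHANGED):
        steps.insert(0, "validate_data")
    # Deployment is conditional on the evaluation result.
    steps.append("deploy_if_better")
    return steps
```

In a real setup the returned steps would be handed to a pipeline orchestrator. The point of the sketch is only that every kind of event maps to a repeatable, predefined sequence.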

CI/CD

MLOps borrows continuous integration and continuous deployment techniques to strengthen collaboration between data scientists and machine learning developers and to speed up the development and production of ML models.

Version control

Many events can change the data or code base, or cause an anomaly in a machine learning model. Every piece of ML training code and every model specification goes through a code review phase, and each is versioned. Version control is an important facet of MLOps: it tracks and saves different versions of the model, which makes it easy to reproduce results and to revert to a previous version if a problem arises.
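
As an illustration, the sketch below derives a deterministic version ID from the training code, parameters, and data, and keeps an in-memory history that supports rollback. `version_id` and `ModelRegistry` are hypothetical names. A production setup would more likely rely on Git plus a dedicated model registry.

```python
import hashlib
import json


def version_id(training_code: str, params: dict, data_rows: list) -> str:
    """Derive a deterministic version ID from code, parameters, and data."""
    payload = json.dumps(
        {"code": training_code, "params": params, "data": data_rows},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]


class ModelRegistry:
    """In-memory stand-in for a real model registry (hypothetical)."""

    def __init__(self) -> None:
        self._versions: list[str] = []

    def register(self, vid: str) -> None:
        # Re-registering an identical version is a no-op.
        if vid not in self._versions:
            self._versions.append(vid)

    def current(self) -> str:
        return self._versions[-1]

    def rollback(self) -> str:
        """Drop the newest version and return the one before it."""
        if len(self._versions) < 2:
            raise ValueError("no earlier version to roll back to")
        self._versions.pop()
        return self._versions[-1]
```

Because the ID is a hash of all three inputs, any change to the code, the hyperparameters, or the data produces a new version, while identical inputs always map to the same one.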

Real-time model monitoring

The job does not end once we put a machine-learning model into use. MLOps allows organizations to track and continuously evaluate the performance and behavior of machine learning models in production environments. Real-time model monitoring facilitates prompt problem detection and rectification, ensuring the model’s effectiveness and accuracy over time.
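
A minimal sketch of such monitoring, assuming that ground-truth labels eventually arrive for production predictions. It tracks accuracy over a sliding window and flags the model once the window is full and accuracy falls below a threshold. The class name, window size, and threshold are illustrative assumptions.

```python
from collections import deque


class AccuracyMonitor:
    """Track accuracy over the most recent `window` predictions."""

    def __init__(self, window: int = 100, threshold: float = 0.9) -> None:
        self.threshold = threshold
        self._hits = deque(maxlen=window)

    def record(self, prediction, actual) -> None:
        # Store True for a correct prediction, False otherwise.
        self._hits.append(prediction == actual)

    def accuracy(self) -> float:
        if not self._hits:
            raise ValueError("no predictions recorded yet")
        return sum(self._hits) / len(self._hits)

    def needs_attention(self) -> bool:
        # Only judge the model once a full window has been observed.
        window_full = len(self._hits) == self._hits.maxlen
        return window_full and self.accuracy() < self.threshold
```

In practice, `needs_attention()` would feed an alerting or retraining mechanism rather than being polled by hand.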

Scalability

There are various ways in which MLOps contributes to scalability. One way is through the automation of ML pipelines. That way, less manual intervention is required, so scaling can be quicker and more reliable in ML operations. Another way that MLOps realizes scalability is through continuous integration/continuous deployment techniques. CI/CD pipelines automate testing and release, accelerating time-to-market and scaling of machine learning solutions.

Compliance

MLOps ensures that machine learning models are created and deployed in an open, auditable manner and adhere to rigorous standards. Furthermore, MLOps can aid in improving model control, guaranteeing proper and ethical conduct, and preventing bias and hallucinations.

Why do we need MLOps?

The broad answer to the question “What is MLOps and why do we need it?” can be outlined as follows. Taking machine learning models to production is no mean feat. The machine learning lifecycle consists of many complex phases and requires cross-functional team collaboration. Maintaining synchronization and coordination between all of these processes is a time and resource-consuming task. Thus, we need some standardized practices that could guide and streamline all processes across the ML lifecycle, remove friction from ML lifecycle management, and accelerate release velocity to translate an ML initiative into ROI.

Let's look at the main reasons why organizations need MLOps.

1. Poor performance of the ML models in production environments

Several factors contribute to the failure of ML models in production. The principal obstacles are data mismatch, model complexity, overfitting, concept drift, and operational problems. Operational problems are the technical difficulties of deploying and running models in dynamic environments, including compatibility, latency, scalability, reliability, security, and compliance issues. A model that has to interact with other systems, components, and users, and to handle variable workloads, requests, and failures, will behave differently in a real-world production environment than in a controlled, isolated setting. This disparity highlights the need for robust testing and validation.

The solution lies in careful model selection, reliable training procedures, continuous monitoring, and close collaboration between data scientists, ML engineers, and domain experts. MLOps is a newer field designed to preempt and address these problems by introducing strict, automated monitoring across the entire pipeline: from data collection, processing, and cleaning to model training, generating predictions, assessing model performance, transferring model output to other systems, and logging model and data versions.
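
One way to automate part of that monitoring is to compare the distribution of a feature in production with its distribution at training time. The sketch below uses the Population Stability Index (PSI), which is our choice of technique for illustration and is not prescribed by the article. The bin count and the small `eps` floor for empty bins are assumptions.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """Population Stability Index between a training and a live sample."""
    lo, hi = min(expected), max(expected)
    # Fall back to a unit width if all training values are identical.
    width = (hi - lo) / bins or 1.0

    def fractions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the training range go into the edge bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        eps = 1e-6  # floor so that empty bins do not produce log(0)
        return [max(c / len(values), eps) for c in counts]

    e, a = fractions(expected), fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

Identical samples give a PSI of zero, and larger values mean a larger shift. A commonly quoted rule of thumb treats values above roughly 0.25 as a significant shift, but the right threshold depends on the feature and the use case.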

2. Not enough collaboration between data science and IT teams

Deployment of ML models to production is conventionally done in a fragmented manner. A model developed by data scientists is handed over to some operations team who then deploys it. This handoff often creates bottlenecks and other related problems due to the high complexity of the algorithms or because of a mismatch among settings, tools, or goals.

MLOps brings together domain expertise that would otherwise stay isolated, reducing the frequency and severity of such problems. This improves efficiency during the development, testing, monitoring, and deployment of machine learning models.

3. Inability to scale the ML solution beyond PoC

The demand for systems that extract business insights from large datasets keeps growing. Machine learning systems therefore need to adapt to evolving data types, scale with rapidly growing data volumes, and reliably produce accurate results despite the uncertainties that accompany live data. To meet this demand, ML systems must be able to move smoothly from the experimental phase to production environments.

Failure to scale often results from different teams working on an ML project in isolation. Such siloed initiatives are hard to scale beyond a proof of concept, and vital operational elements get overlooked. MLOps defines standardized tooling and best practices, covering both culture and defined, repeatable actions, that address every element of the ML lifecycle. The aim is reliable, fast, and continuous production of models at scale.

4. High repetitive task load across the ML lifecycle

MLOps shortens the ML development lifecycle and improves model stability by automating many repetitive processes in data science and engineering workflows. Because automation removes the need to repeat the same actions countless times, distributed teams can spend their time on more strategic and agile management of ML models and on higher-order business problems.

5. Faster time-to-market and cost reductions

A standard machine learning pipeline comprises phases such as data collection, preprocessing, model training, assessment, and deployment. Traditional manual approaches often introduce inefficiencies at every step, making them time-consuming and labor-intensive. Fragmented processes and poor communication disrupt ML model deployment, causing complications, version control issues, and inefficiencies that hinder smooth execution.

Two major advantages of automating model creation and deployment using MLOps are reduced operational expenditure and expedited time-to-market. The goal of the newly emerging area of MLOps is to give the ML lifecycle speed and agility. With MLOps, ML development cycles become shorter, and deployment velocity rises. Effective resource management, in turn, leads to significant cost reductions and faster time-to-value.
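
The pipeline phases named in this section (data collection, preprocessing, training, assessment, deployment) can be sketched as a chain of small functions that pass a shared context along. Everything here is a toy assumption made for illustration: the data follows y = 2x, the "model" is a single least-squares slope, and "deployment" is just a flag.

```python
from typing import Callable

Stage = Callable[[dict], dict]


def collect(ctx: dict) -> dict:
    # Toy data following y = 2x, plus one broken record.
    return {**ctx, "raw": [(x, 2 * x) for x in range(10)] + [(None, 0)]}


def preprocess(ctx: dict) -> dict:
    # Drop records with a missing input value.
    return {**ctx, "clean": [(x, y) for x, y in ctx["raw"] if x is not None]}


def train(ctx: dict) -> dict:
    # Least-squares slope through the origin: sum(xy) / sum(x^2).
    num = sum(x * y for x, y in ctx["clean"])
    den = sum(x * x for x, _ in ctx["clean"])
    return {**ctx, "slope": num / den}


def evaluate(ctx: dict) -> dict:
    errors = [abs(ctx["slope"] * x - y) for x, y in ctx["clean"]]
    return {**ctx, "mae": sum(errors) / len(errors)}


def deploy(ctx: dict) -> dict:
    # Hypothetical quality gate: deploy only if the error is small.
    return {**ctx, "deployed": ctx["mae"] < 0.1}


def run(stages: list[Stage]) -> dict:
    """Run the stages in order, threading the context through each one."""
    ctx: dict = {}
    for stage in stages:
        ctx = stage(ctx)
    return ctx
```

Calling `run([collect, preprocess, train, evaluate, deploy])` executes the whole chain without manual handoffs, which is where the time savings described above come from.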

High-level plan for implementing MLOps in an organization

Implementing MLOps in an organization takes several steps that enable a smooth transition to a more automated and efficient machine learning workflow. Here is the ITRex experts' high-level plan for implementing MLOps within an organization:

1. Assessment and planning

- Problem definition: identify the problem to be solved with AI.

- Set clear objectives and assess your current MLOps maturity.

- Ensure cross-functional collaboration between your data science and IT teams, clearly defining roles and responsibilities.

2. Building a robust data pipeline

- Set up a reliable and scalable solution for ingesting data from various sources, processing it, and preparing it.

- Implement data versioning and lineage tracking for reproducibility and transparency.

- Automate quality assurance and data validation to ensure the correctness and reliability of the data.
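
The automated data validation mentioned in the last bullet can start as simply as checking every incoming row against an expected schema. The sketch below is a minimal illustration. The function name and the schema format (column name mapped to a Python type) are assumptions, not the interface of any specific validation library.

```python
def validate_rows(rows: list[dict], schema: dict[str, type]) -> list[str]:
    """Return human-readable problems; an empty list means the batch passed."""
    problems = []
    for i, row in enumerate(rows):
        for column, expected_type in schema.items():
            if column not in row or row[column] is None:
                problems.append(f"row {i}: missing '{column}'")
            elif not isinstance(row[column], expected_type):
                problems.append(
                    f"row {i}: '{column}' should be {expected_type.__name__}"
                )
    return problems
```

A pipeline could refuse to train on a batch for which this function returns a non-empty list, so that bad data is caught before it reaches the model.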

3. Infrastructure setup

- Decide whether to build, buy, or go hybrid for your MLOps infrastructure.

- Choose an MLOps platform or framework based on the needs, preferences, and existing infrastructure of the organization.

- Consider fully managed end-to-end cloud services such as Amazon SageMaker, Google Cloud ML, or Azure ML, which offer auto-scaling and algorithm-specific features such as automatic hyperparameter tuning, easy deployment with rolling updates, monitoring dashboards, and more.

- Set up the necessary infrastructure for training ML models and tracking model training runs.

4. Facilitate model development

- Use version control systems, such as Git, and implement code version control and model version control solutions.

- Use containerization, such as Docker, to ensure consistency and reproducibility of model training environments.

- Automate model training and evaluation pipelines for continuous integration and delivery.
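
An automated evaluation step usually ends in a decision: should the newly trained model replace the current one? Below is a minimal sketch of such a gate. It assumes that all metrics are "higher is better" and that a candidate must match or beat the baseline on every metric. `should_promote` and `min_gain` are hypothetical names.

```python
def should_promote(
    candidate: dict[str, float],
    baseline: dict[str, float],
    min_gain: float = 0.0,
) -> bool:
    """Promote only if the candidate meets the baseline on every metric.

    Assumes higher values are better. A metric missing from the
    candidate counts as a failure.
    """
    return all(
        candidate.get(name, float("-inf")) >= value + min_gain
        for name, value in baseline.items()
    )
```

A CI/CD job could call this after the evaluation stage and stop the release when it returns `False`.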

5. Model monitoring

- Set up comprehensive monitoring of system health, data drift, and model performance.

- Define key metrics to measure the quality of the model.

- Monitor model performance with alerting and notification mechanisms that inform stakeholders if any issues or anomalies arise.

6. Ensure model governance and compliance

- Provide procedures for detecting bias, assessing fairness, and evaluating model risk.

- Establish strict access controls and audit trails for sensitive data and model artifacts.

- Ensure compliance with industry- and region-specific regulatory requirements and privacy guidelines by protecting data and models from security threats (through access control, encryption, and regular security audits).
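
As one example of a bias check from the first bullet in this step, the sketch below computes the gap in positive-prediction rates between groups, often called the demographic parity difference. This is one of several possible fairness measures and is our choice for illustration. The function name and the 0/1 encoding of predictions are assumptions.

```python
from collections import defaultdict


def demographic_parity_gap(predictions: list[int], groups: list[str]) -> float:
    """Largest difference in positive-prediction rate between any two groups.

    `predictions` holds 0/1 model outputs and `groups` holds the group
    label of each prediction, in the same order.
    """
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates)
```

A gap of zero means all groups receive positive predictions at the same rate. What counts as an acceptable gap is a policy decision and not something the code can settle.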

7. Automate model deployment

- Adopt a containerized or serverless approach to deploying and serving your models.

- Select an effective model deployment strategy (batch, real-time, etc.).

- Configure CI/CD pipelines with automated testing, integration of data and code updates, and automatic deployment of ML models into the production environment.

8. Monitor and maintain

- Refine MLOps practices and establish feedback loops for continuous model optimization.

- Implement automated tools for model retraining, triggered by new data or by model degradation or drift; the same goes for hyperparameter tuning and model performance assessment.

Why collaborate with an MLOps company?

Partnering with an MLOps company can bring many advantages when implementing MLOps practices in an organization. Let us outline the most common ones:

Specialized knowledge

MLOps companies give you access to teams experienced in machine learning, software engineering, data engineering, and cloud computing across a wide range of industries and use cases, who can bring real insight and best practices to your specific needs.

Faster implementation

MLOps experts can fast-track your adoption of MLOps methodologies by providing tried and tested frameworks, tools, and processes. They draw on established playbooks for creating roadmaps, setting goals, assessing the current state of your company, and executing ML implementation plans effectively.

Avoid common pitfalls

Notably, there are challenges associated with the MLOps adoption process. Professionals experienced in MLOps will be able to indicate pitfalls, show a way through complex technical landscapes, and adopt proactive measures against issues to help reduce the risks associated with the implementation of MLOps practices.

Access to the latest tools and technologies

Given the vast range of tools and platforms used at different stages of the ML lifecycle, the technology landscape can be overwhelming for any organization to navigate. MLOps engineers will help your business find its way through this maze and will recommend and deploy state-of-the-art solutions that might otherwise not be readily available or accessible to your organization.

Customized approach

MLOps companies can tailor their offering to your company's specific needs, objectives, and limitations. They can evaluate your current workflows, infrastructure, and skill sets and design solutions to match.

At ITRex, we help organizations harness the full potential of ML models. Our MLOps team combines technological expertise with business acumen to build an iterative, more structured ML workflow.

Our proficiency spans all AI domains, including classic ML, deep learning, and generative AI, and is backed by a strong data team and an internal R&D department. This enables us to build, deploy, and scale AI solutions that generate tangible value and translate into ROI.

For example, our MLOps specialists helped a social media giant improve its live stream content moderation by building an ML tool and applying MLOps best practices to automate and accelerate AI deployment.

Our ML/AI engineers developed a computer vision model for live stream analysis, and MLOps engineers optimized it on a GPU to enhance throughput. Go to the case study page to learn about the results of the project.

Key takeaways

- MLOps refers to a set of practices for collaboration and interaction between data scientists and operations teams, designed to enhance quality, optimize ML lifecycle management, and automate and scale the deployment of machine learning in large-scale production environments.

- Putting ML models into wide-scale production requires a standardized and repeatable approach to machine learning operationalization.

- MLOps includes essential components that are key to successful ML project implementation and help answer the question “What is MLOps and why do we need it?”. These are collaboration, automation, CI/CD, version control, real-time model monitoring, scalability, and compliance.

- The key reasons why MLOps matters and why organizations should look to adopt it are poor performance in production environments, insufficient collaboration between data science and operations teams, inability to scale ML solutions to enterprise production, an abundance of repetitive tasks in the ML lifecycle, slow development and release cycles, and excessive costs.

- Hiring MLOps experts means gaining access to specialized knowledge and the latest tools and technologies, reducing the risks of implementing MLOps practices, accelerating the deployment of ML models, getting expert help tailored to your business needs, and achieving faster returns on AI/ML investments.
